The Ai4 conference in Las Vegas offered a snapshot of where the AI industry stands today. The sessions navigated between foundational concepts and emerging applications, with most content falling into familiar software engineering patterns dressed in modern AI terminology.

Most sessions clustered around two approaches: broad conceptual overviews for newcomers and detailed enterprise implementations with narrow applicability. This distribution suggests an industry still establishing its knowledge base while practitioners work through real-world challenges. Most teams are trying to close the gap between the two right now, catching up on AI agents and developing new frameworks.

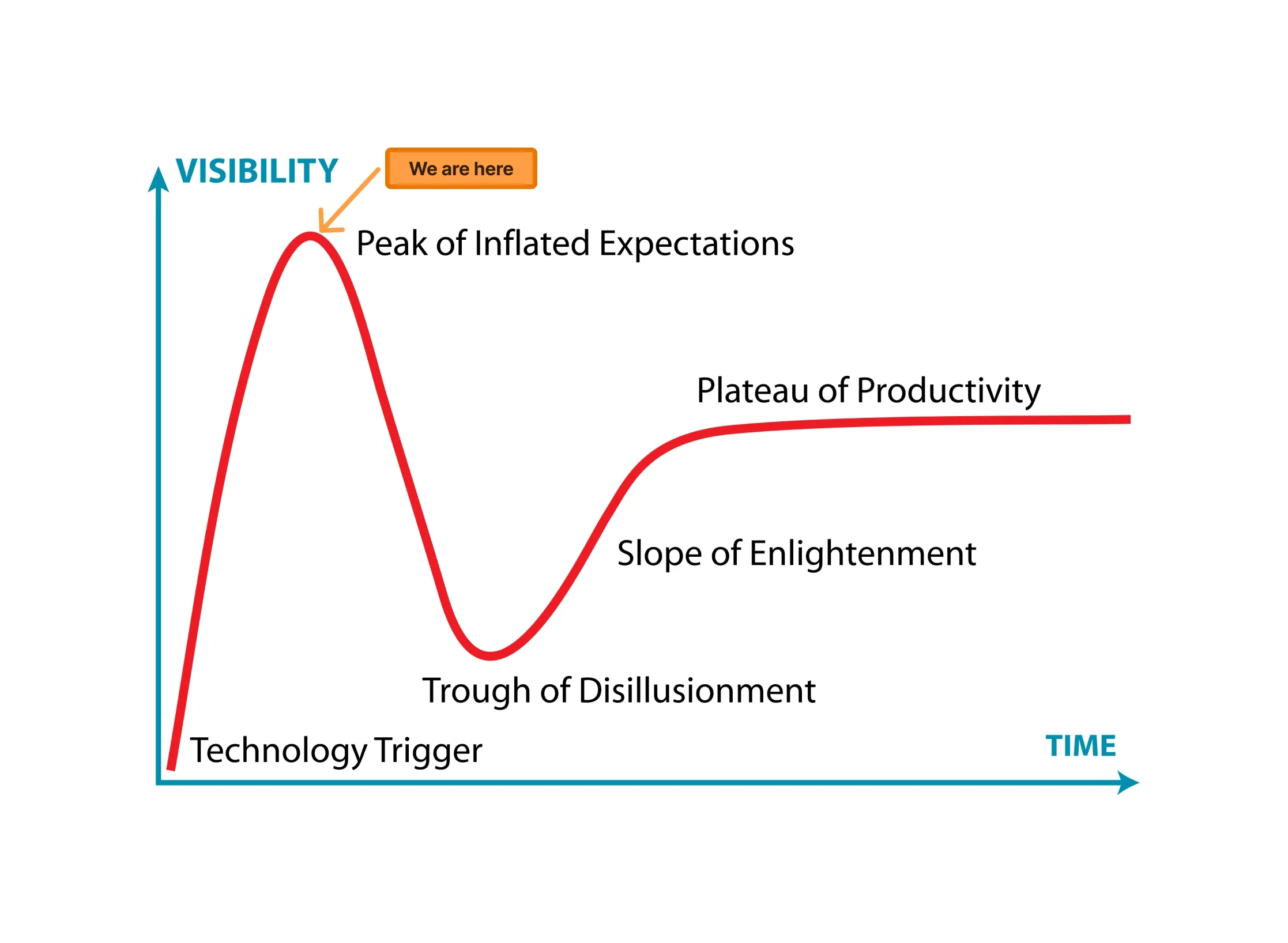

Industry status - peak hype and establishing fundamentals

AI Gartner Hype Cycle

We are at the peak of the Gartner Hype Cycle, a pattern we’ve seen multiple times in technology. The conference agenda confirmed this and revealed how AI development maps onto established software engineering practices:

- “How to hire best AI talent” centers on hiring strong developers. The core requirements remain consistent: solid engineering fundamentals, system design capabilities, and domain knowledge. AI-specific skills layer on top of these foundations rather than replacing them.

- “What is RAG and knowledge representation” follows familiar architectural patterns seen in MVC frameworks. The concepts translate directly: data layer, processing layer, presentation layer. Understanding these parallels helps teams apply existing architectural knowledge to AI systems.

- “What are evals” addresses testing methodologies for AI systems. The testing pyramid concept applies here, though probabilistic outputs introduce new complexity. Teams familiar with comprehensive testing strategies have a significant advantage.

- “How to blend everything together” focuses on AI application development using proven software engineering practices. The integration challenges mirror those found in any distributed system with additional considerations for model behavior.

- “Model hosting” addresses deployment strategies with additional constraints around GPU requirements and latency considerations. The infrastructure decisions follow established patterns with new hardware requirements.

- “How to measure value” tackles ROI calculation, a persistent challenge across all software projects. AI systems add complexity through their probabilistic nature, but the fundamental business value questions remain the same.

Ai4 gathered 8000+ attendees

AI financial value

The standard ROI calculation applies: ROI = (net profit / costs) * 100. The challenge lies in accurately measuring both sides of this equation: as in traditional software development, the costs and returns of AI systems are hard to predict.

Cost components:

- Infrastructure (GPU/CPU compute, storage)

- Model licensing or training expenses

- Development and maintenance overhead

- Operational monitoring and optimization

Profit measurement complexity:

- Quality improvements are often subjective (think about ChatGPT 5)

- Business impact may be indirect (reduced support tickets, faster decision-making)

- Value attribution becomes difficult when AI augments human work rather than replacing it
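As a rough illustration of the formula and cost components above, here is a minimal Python sketch. The `AnnualCosts` class, the `roi_percent` function, and every dollar figure are hypothetical and chosen only to make the arithmetic easy to follow. Because the benefit side is the uncertain one, the sketch evaluates a range of scenarios instead of a single number.

```python
from dataclasses import dataclass


@dataclass
class AnnualCosts:
    """Yearly cost components (all figures are hypothetical)."""
    infrastructure: float  # GPU/CPU compute, storage
    model: float           # model licensing or training
    development: float     # development and maintenance
    operations: float      # monitoring and optimization

    def total(self) -> float:
        return (self.infrastructure + self.model
                + self.development + self.operations)


def roi_percent(gross_benefit: float, costs: AnnualCosts) -> float:
    """ROI = (net profit / costs) * 100, with net profit = benefit - costs."""
    total = costs.total()
    if total <= 0:
        raise ValueError("total costs must be positive")
    return (gross_benefit - total) / total * 100


costs = AnnualCosts(infrastructure=120_000, model=60_000,
                    development=200_000, operations=20_000)

# Hypothetical benefit scenarios; in practice these are the hard part to estimate.
scenarios = {"pessimistic": 300_000, "expected": 500_000, "optimistic": 800_000}
for label, benefit in scenarios.items():
    print(f"{label}: {roi_percent(benefit, costs):.1f}%")
# pessimistic: -25.0%
# expected: 25.0%
# optimistic: 100.0%
```

The spread between the pessimistic and optimistic rows is the point: with indirect or hard-to-attribute benefits, the ROI is better reported as a range than as one figure.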

Ai4 Keynote

Evaluation challenges

The industry lacks a robust framework for evaluating multi-turn conversations. Unlike single-shot tests where you compare input/output against expected results, conversations involve context accumulation and dynamic goals across multiple exchanges. This is an opportunity to build a framework and conquer the market.

Current approaches each have fundamental limitations:

- Human annotation evals: expensive, slow, inconsistent at scale

- LLM-as-a-Judge evals: fast but biased, struggle with subjective quality

- Automated or code evals: work for narrow metrics, miss conversational coherence

Without robust measurement frameworks, teams optimize for metrics that poorly correlate with actual user experience. This evaluation gap creates an opportunity for new frameworks to emerge.
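To make the "code evals" category concrete, here is a minimal sketch of deterministic checks over a multi-turn transcript. The transcript, the check names, and the `run_checks` helper are all hypothetical and not taken from any existing framework; the aim is only to show how narrow such checks are.

```python
Turn = dict[str, str]  # {"role": "user" | "assistant", "content": "..."}


def assistant_replies(conversation: list[Turn]) -> list[str]:
    return [t["content"] for t in conversation if t["role"] == "assistant"]


def no_empty_replies(conversation: list[Turn]) -> bool:
    return all(reply.strip() for reply in assistant_replies(conversation))


def final_reply_mentions_goal(conversation: list[Turn], keyword: str = "refund") -> bool:
    replies = assistant_replies(conversation)
    return bool(replies) and keyword in replies[-1].lower()


def no_repeated_question(conversation: list[Turn]) -> bool:
    """Crude context check: the assistant never asks the exact same question twice."""
    questions = [r.strip().lower() for r in assistant_replies(conversation)
                 if r.strip().endswith("?")]
    return len(questions) == len(set(questions))


def run_checks(conversation: list[Turn]) -> dict[str, bool]:
    checks = [no_empty_replies, final_reply_mentions_goal, no_repeated_question]
    return {check.__name__: check(conversation) for check in checks}


# Hypothetical support conversation where the assistant loses context.
conversation = [
    {"role": "user", "content": "I want a refund for order A123."},
    {"role": "assistant", "content": "What is your order number?"},
    {"role": "user", "content": "It's A123, as I said."},
    {"role": "assistant", "content": "What is your order number?"},
    {"role": "user", "content": "A123!"},
    {"role": "assistant", "content": "Your refund for order A123 has been issued."},
]

results = run_checks(conversation)
print(results)
```

Here the repeated-question check fails only because the assistant repeats itself verbatim. A paraphrased repeat would pass all three checks, which is the kind of coherence problem that code evals tend to miss.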

Ai4 Las Vegas views

Worth exploring

Despite the AI hype fatigue, I found a few areas, tools, and approaches worth exploring:

- Model quantization - practical approaches to compress models without significant quality loss, making deployment more economical.

- GraphRAG - moving beyond simple vector similarity to understanding relationships and hierarchies in knowledge representation.

- Red Hat LLM Performance Benchmark - demonstrates serving compressed models with reduced GPU requirements while maintaining performance.

- WebArena - self-hostable web environment for testing AI agents across realistic websites and complex web interactions.

- LATTE - vision-language model that separates perception from reasoning, showing 10-16% improvements on visual reasoning benchmarks.

- xLAM Models - Large Action Models (1B-141B parameters) designed to empower AI agent systems with function calling capabilities.

- MCPEval - open-source framework for comprehensive LLM agent evaluation using Model Context Protocol.

- LLM to SLM optimization - distilling large models into smaller, specialized versions that maintain performance while reducing compute requirements.
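For the model quantization item in the list above, here is a toy sketch of the core idea, assuming symmetric per-tensor int8 quantization on a plain Python list. Real toolchains work on tensors and typically use finer-grained scales and calibration data, so treat the function names and the sample weights as illustrative only.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale."""
    if not weights:
        return [], 1.0
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.82, -1.27, 0.03, 0.5, -0.91]  # hypothetical layer weights
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# Rounding error per weight is bounded by half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(quantized, scale, max_error)
```

Each weight is stored in one byte instead of four (for float32), at the price of a rounding error of at most half the scale. Whether that error is "without significant quality loss" has to be measured on the model's actual tasks.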

Ai4 exhibition

Industry perspective

The AI industry is working through fundamental software engineering challenges while adapting to new constraints around model behavior, evaluation complexity, and resource requirements. This mirrors the trajectory of any emerging technology field, especially one at the top of the Gartner Hype Cycle. Advances are happening in implementation details, evaluation frameworks, and deployment optimization, and there are plenty of opportunities to build new software, frameworks, and models.