AWS Summit DC 2025 Keynote

I spent a couple of days at AWS Summit 2025 in Washington DC. Here’s what I learned about AI trends.

Amazon Q Business

Amazon Q Business is a generative AI assistant for the workplace. It helps people be more productive without having to learn complex AI tools.

What makes Q Business special is how easy it is to use. Anyone can create chatbots that connect to company data like SharePoint, Salesforce, or databases. You don’t need coding skills or AI expertise.

Key benefits:

- Create AI chatbots without writing code

- Works with existing login systems (Active Directory, SAML)

- Choose from different AI models in AWS Marketplace

- Control who can access what information

- Built-in safety features and compliance rules

- Works for both internal teams and customers

AI-Powered Search

Search is getting smarter. Instead of just matching keywords, AI can now understand what you really mean when you search. The presentation showed how search evolved from simple keyword matching to semantic search, then hybrid search, conversational search and finally AI agent-powered search, with each step getting more intelligent.

Amazon OpenSearch Service can use AI models like BERT to turn text into numeric vectors (embeddings) that capture meaning rather than exact wording. This works well with RAG (Retrieval-Augmented Generation), where search results are passed to a language model as context so it can give better answers.

The technical foundation combines inverted indexes and vector spaces. Traditional keyword search relies on an inverted index, which maps each term to the documents that contain it. Modern AI search also places documents in a multi-dimensional vector space where similar concepts cluster together, and uses measures such as Euclidean distance and cosine similarity to find relevant results.
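
To make the distance idea concrete, here is a minimal sketch in plain Python. The three-dimensional vectors and document names are made up for illustration; real embeddings come from a model and have hundreds of dimensions, and this is not how OpenSearch implements vector search internally.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def euclidean_distance(a, b):
    """Straight-line distance between two vectors (0.0 means identical)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

# Hypothetical embeddings: the first two documents are about time off,
# the third is about something unrelated.
docs = {
    "vacation policy": [0.9, 0.1, 0.0],
    "holiday schedule": [0.8, 0.2, 0.1],
    "gpu pricing": [0.0, 0.1, 0.9],
}
query = [0.85, 0.15, 0.05]  # hypothetical embedding of "time off rules"

# Rank documents from most to least similar to the query.
ranked = sorted(
    docs,
    key=lambda name: cosine_similarity(query, docs[name]),
    reverse=True,
)
print(ranked)
```

The two time-off documents rank ahead of the unrelated one even though none of them share a keyword with the query, which is the point of searching by meaning.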

What this means for businesses:

- Find documents by meaning, not just keywords

- Ask questions in normal language

- Search across different types of data at once

- Get personalized results based on your work

Modern applications increasingly give direct answers instead of just showing a list of search results. This is where RAG comes in: the system searches a knowledge base, retrieves the relevant documents, feeds them to a language model and generates a final answer. With Amazon Bedrock Agents, AI can search multiple databases, combine information and answer complex questions automatically.
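
The RAG flow can be sketched in a few lines of Python. Everything here is a stand-in chosen for illustration: the retriever is a toy word-overlap ranker (a real system would use vector or hybrid search), the knowledge base is invented, and `llm` is any function you pass in, not a Bedrock or OpenSearch API.

```python
import re

def tokenize(text):
    """Lowercase a string and split it into a set of words."""
    return set(re.findall(r"\w+", text.lower()))

def retrieve(query, knowledge_base, top_k=2):
    """Toy retriever: rank documents by how many words they share with the query."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in knowledge_base]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for overlap, doc in scored[:top_k] if overlap > 0]

def build_prompt(query, documents):
    """Put the retrieved documents in front of the question as context."""
    context = "\n".join(f"- {doc}" for doc in documents)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

def answer(query, knowledge_base, llm):
    """Search, retrieve, then hand the prompt to the language model."""
    documents = retrieve(query, knowledge_base)
    return llm(build_prompt(query, documents))

# Hypothetical knowledge base and a fake model that just echoes the prompt.
knowledge_base = [
    "Employees get 20 vacation days per year.",
    "The office is closed on public holidays.",
    "GPU instances are billed per second.",
]
question = "How many vacation days do employees get?"
print(answer(question, knowledge_base, llm=lambda prompt: prompt))
```

Swapping the fake `llm` for a real model call, and the toy retriever for a search index, gives the same overall shape.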

Links:

- GitHub Generative AI-powered search with Amazon OpenSearch Service

- Semantic and Vector Search with Amazon OpenSearch Service Workshop

- Unified User Experiences with Hierarchical Multi-Agent Collaboration

- Amazon Bedrock Agents

- Open Search Plugins and approaches

AWS Summit DC 2025 Expo Zone

vLLM: Fast AI Model Serving

vLLM is an open-source library that makes serving AI models faster and cheaper. Researchers at UC Berkeley created it to fix a problem: serving large language models needs too much memory and runs slowly.

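The main innovation is called PagedAttention. It stores the attention key-value cache in small fixed-size blocks, much like an operating system pages virtual memory, so much less memory is wasted than with older methods. This means you can serve 2-4x more requests with the same hardware.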
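
Here is a toy illustration of the paging idea, assuming a cache divided into fixed-size blocks that are handed out only when a request actually needs them. The class and its names are hypothetical and this is not vLLM's code; it only shows why on-demand blocks waste less memory than reserving the maximum length up front.

```python
class BlockAllocator:
    """Toy block-based cache allocator (illustrative, not vLLM's implementation)."""

    def __init__(self, num_blocks, block_size):
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))  # physical block ids
        self.block_tables = {}  # request id -> list of physical block ids
        self.lengths = {}       # request id -> number of tokens stored

    def append_token(self, request_id):
        """Reserve space for one more token, taking a new block only when needed."""
        length = self.lengths.get(request_id, 0)
        table = self.block_tables.setdefault(request_id, [])
        if length % self.block_size == 0:  # no block yet, or the last one is full
            if not self.free_blocks:
                raise MemoryError("no free cache blocks")
            table.append(self.free_blocks.pop())
        self.lengths[request_id] = length + 1

    def free(self, request_id):
        """Return all of a finished request's blocks to the pool."""
        self.free_blocks.extend(self.block_tables.pop(request_id, []))
        self.lengths.pop(request_id, None)

allocator = BlockAllocator(num_blocks=8, block_size=4)
for _ in range(5):
    allocator.append_token("request-a")  # 5 tokens need 2 blocks
for _ in range(3):
    allocator.append_token("request-b")  # 3 tokens need 1 block
print(allocator.block_tables, len(allocator.free_blocks))
```

Two requests of different lengths share one pool, and the blocks a request holds do not need to be next to each other in memory.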

For AWS users, vLLM works well with Inferentia and Trainium chips, which cost less than regular GPUs. It also supports optimization techniques such as quantization to make models smaller and faster.

Key benefits:

- Exposes an OpenAI-compatible API (easy to integrate)

- Scales across multiple GPUs

- Faster responses for time-sensitive apps

- Uses memory more efficiently
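
As a rough sketch of what the OpenAI-style integration looks like, the snippet below builds a chat completion request using only the Python standard library. The server address, model name and `build_chat_request` helper are placeholders I made up; the request is only constructed here, not sent, so it runs without a server.

```python
import json
import urllib.request

def build_chat_request(base_url, model, user_message):
    """Build a POST request in the OpenAI chat completions format."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "max_tokens": 64,
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

# Placeholder values: point these at your own server and model.
request = build_chat_request(
    base_url="http://localhost:8000",
    model="my-model",
    user_message="Summarize PagedAttention in one sentence.",
)
print(request.full_url)

# To send it (requires a running server at base_url):
# with urllib.request.urlopen(request) as response:
#     reply = json.load(response)
#     print(reply["choices"][0]["message"]["content"])
```

Because the format matches OpenAI's, existing client code can usually be pointed at a vLLM server by changing only the base URL and model name.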

Links:

- GitHub vLLM

- Generative AI on Amazon EKS Workshop

- Serving LLMs using vLLM and Amazon EC2 instances with AWS AI chips

AWS Summit DC 2025 Overlook

NVIDIA AI Platform

NVIDIA is no longer just a graphics card company. They now provide a complete AI platform with hardware, software and tools for the entire AI development process. Their infrastructure diagram showed the full stack: applications at the top (like finance, healthcare and robotics), platforms and acceleration services in the middle, then a system software layer, and a hardware foundation with different GPU types (H100, A100, etc.) at the bottom.

The NeMo framework is their main tool for building conversational AI. It can scale training across thousands of GPUs and connects to AWS services like EKS and Bedrock.

What NVIDIA offers:

- Complete AI development environment

- Advanced training techniques for large models

- Enterprise security features

- Works across different cloud providers (AWS, Google Cloud, Azure)

They showed how to generate structured reports using LangChain and NVIDIA’s API. This helps process documents, run analysis and create content automatically.

Links:

- GitHub NVIDIA NeMo project

- LangChain Structured Report Generation

- Structured Report Generation Cookbook Jupyter Notebook

- GitHub NVIDIA NIM Deploy

Main Takeaways

The summit showed four big trends in business AI:

- AI is getting cheaper to run: Tools like vLLM and AWS Inferentia make high-performance AI more affordable

- Anyone can build AI tools: Platforms like Amazon Q Business let non-technical people create AI solutions

- Smart search is becoming normal: Vector search and RAG are now standard for company knowledge systems

- AI agents do complex work: Amazon Bedrock Agents can handle multi-step business processes automatically

These changes mean AI is becoming basic infrastructure, like email or databases, rather than a specialized tool. This will change how knowledge workers do their jobs.

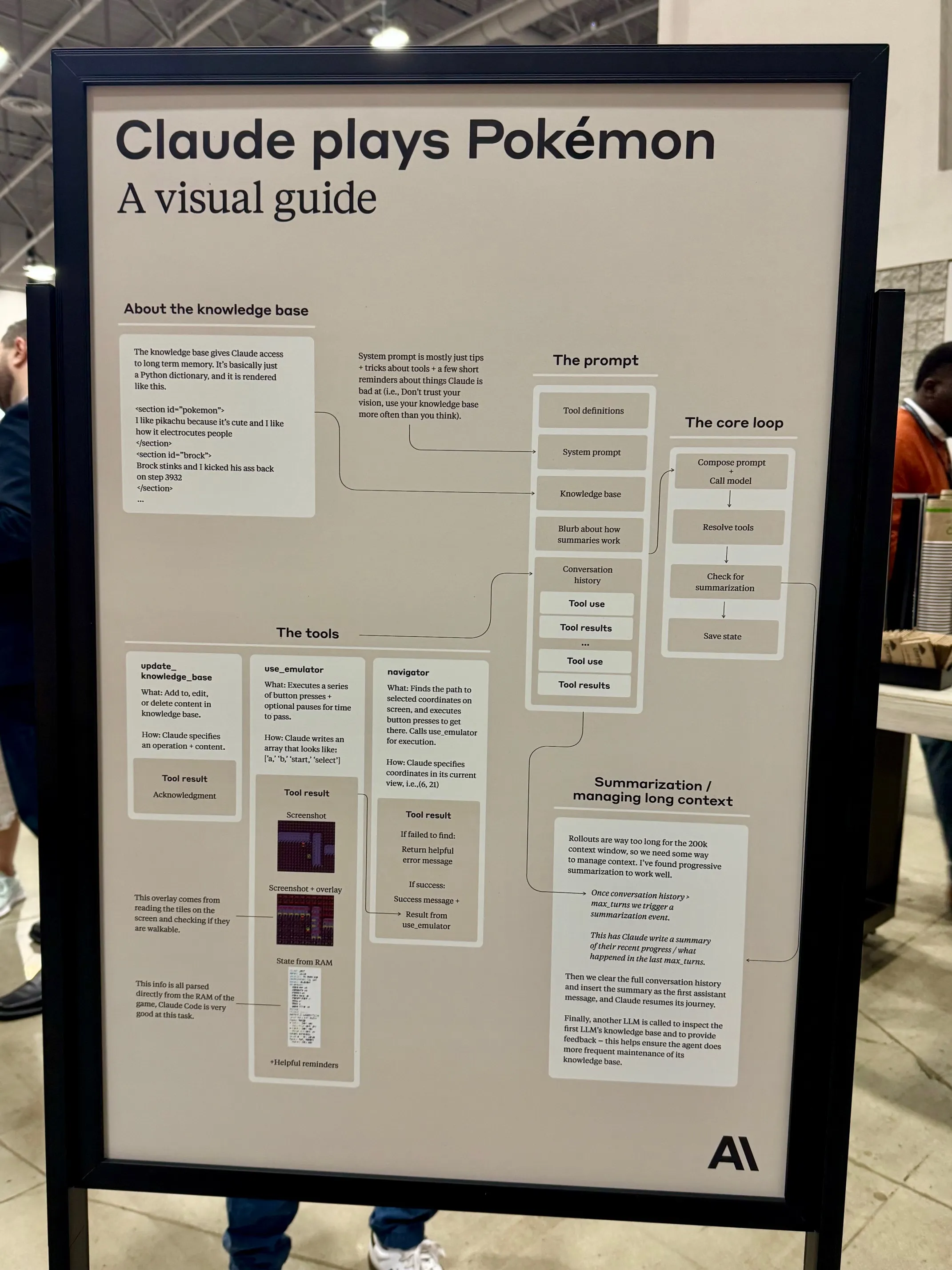

Claude AI demonstration: Multimodal AI capabilities in action